Albert Tort

KCycle: Knowledge-Based & Agile Software Quality Assurance

An approach for iterative and requirements-based quality assurance in DevOps

Agile paradigms and DevOps approaches have changed software engineering processes by focusing on iteration, communication and collaboration between different project roles. These approaches aim to reduce time-to-market and increase the frequency of application delivery. In this context, testing and Quality Assurance activities must play a central role in ensuring the quality of the delivered software.

Moreover, even in agile, requirements engineering is essential in order to ensure the global quality of the delivered software by taking into account the big picture. Consequently, traditional ways of managing requirements and the associated Quality Assurance activities aimed at testing such requirements need to be rethought in order to fit with the properties of current agile approaches. In this paper, we present KCycle: an end-to-end approach for iterative Quality Assurance in agile and DevOps projects, taking enhanced user stories as a base for driving the requirements engineering practice in this context. This approach promotes continuous quality gates, structured user stories and acceptance criteria for generating test cases, agile testing reporting and innovative knowledge-based and iteration-by-iteration metrics for project management and governance.

1. Introduction

According to the agile manifesto [1], “Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.” In other words, agile approaches promote reducing the time-to-market and providing continuous releases. In this context, agile paradigms and DevOps [2] approaches have also raised the need for agile requirements engineering and agile testing practices, in order to check and measure the value of the delivered software. Consequently, from a Quality Assurance (QA) perspective, agile practices need to include frequent quality gates that perform continuous validation checks before software is released. Put differently, speed must be balanced with quality checks.

Agile approaches increase the number of iterations and rely on divide-and-conquer strategies. Testing can therefore no longer be conceived as an isolated, late activity in the software engineering process, as it is in traditional practice. Test design (the specification of detailed test cases), execution and reporting need to be adapted so that iterative, continuous checks are applied in each iteration as early as possible.

Furthermore, it is necessary to have an analysis view of the big picture [3] of the software being delivered, taking into account the accumulated knowledge after each specific iteration. In order to obtain general quality indicators, it is necessary to generate, update and maintain evidence and iteration-by-iteration metrics for better project management.

In order to address these challenges, testing roles need to be considered an active part of the software engineering process, taking into account the need for collaboration and promoting inclusive communication strategies. This approach follows the agile principle “Business people and developers must work together daily throughout the project”, but also includes testing and QA roles. We also promote adapting communication to each project context, going further than considering that “The most efficient and effective method of conveying information to and within a development team is face-to-face conversation”. In many projects with distributed teams, variable availability of people, etc., we need to include complementary written communication artifacts as a means to reduce ambiguity and requirements misunderstandings that may become conflicts with economic impact.

User stories are commonly accepted agile requirements artifacts. They are short, simple descriptions of a feature told from the perspective of the person who desires the new capability. Moreover, user stories are usually complemented with acceptance criteria, which define a set of statements that represent conditions of satisfaction. If such user stories are enhanced with additional information, they may serve as a base for explicit communication between developers and testers, and may even make it possible to generate test case designs automatically at each agile iteration.

In this paper, we present KCycle, an approach for iterative QA in agile and DevOps projects. This approach promotes continuous quality gates, structured user stories, generation of test cases from structured user stories and configurable coverage criteria, agile testing reporting facilities, documentation generation and, through an iteration-by-iteration analysis dashboard, innovative knowledge-based metrics for enhancing project management in agile environments.

2. The Approach

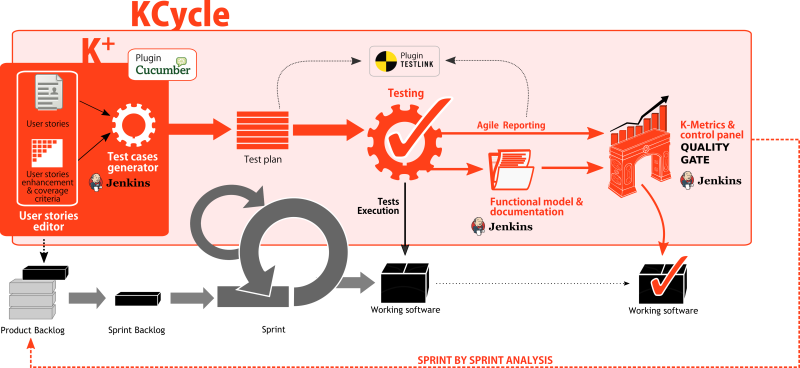

Fig. 1 gives an overview of the main components of the KCycle approach. KCycle focuses on three main objectives: (1) setting up quality gates for iterative and continuous validation checks across the agile [4] process, (2) facilitating communication between development, operations and testing roles, even when time and location restrictions prevent face-to-face contact, and (3) enhancing management in the context of agile iterations without losing the big picture of the project from a knowledge management perspective.

The main components of the approach are:

- Test case generation from semiformal user stories (K+). This component takes enriched semiformal user stories and their acceptance criteria as input and automates the generation of test cases for a given set of coverage criteria. User stories and acceptance criteria need to be written in Gherkin-Cucumber [5] or using a specific K+ editor. This component contributes to the agility of testing in DevOps contexts, since the design time of test cases may be considerably reduced by reusing the knowledge contained in the user stories. The result of K+ is a set of test designs in machine-generated natural language, which can be visualized in HTML format or exported to test management systems. Currently, export is implemented for TestLink [6] and Microsoft Excel.

- Agile Testing Reporting. Test cases generated by K+ may be executed manually or automatically depending on the project objectives and characteristics. The result of executing a test case is a testing verdict which may be Pass, Fail or Error. In case of automated execution, the Gherkin language allows implementation of the specified behavior. KCycle includes a lightweight procedure to report the execution of test cases in case the particular agile context does not use a full specific test management system. This characteristic is aimed at reducing the complexity of test reporting in agile teams if no specific test management system is used. Nevertheless, the presented approach also includes compatibility functions to report testing verdicts into test management systems. Currently, reporting into TestLink is supported. Integration with other tools is in progress.

- Functional model and documentation generation. From generated test cases, the proposed approach includes the generation of functional documentation that accumulates the specification of the big picture of the project in terms of functional knowledge represented as concepts, relationships and operations. The generation of the functional model and the documentation may be triggered in each agile iteration. These models have two objectives: (1) they serve as a communication base for system knowledge, and (2) they are the base for computing management metrics based on the knowledge that is considered in each iteration.

- K-Metrics. The approach includes a control panel for managing the project, based on existing metrics such as the number of test cases, the number of passing or failing test cases, etc. Moreover, we include the concept of K-Metrics, which compare, iteration by iteration, knowledge data related to the number of concepts, relationships and functionalities that are covered by tests.

- Continuous integration & delivery. The generation of test cases from user stories, the export of test cases to test management systems and the computation of K-Metrics may be triggered by using automation servers like Jenkins [7].

Agile reporting, functional model and documentation generation, K-Metrics and continuous integration support are complementary components that enrich K+ to become KCycle, an integral approach for agile projects focused on continuous quality assurance.

3. Automatic Story-Driven Generation of Test Cases

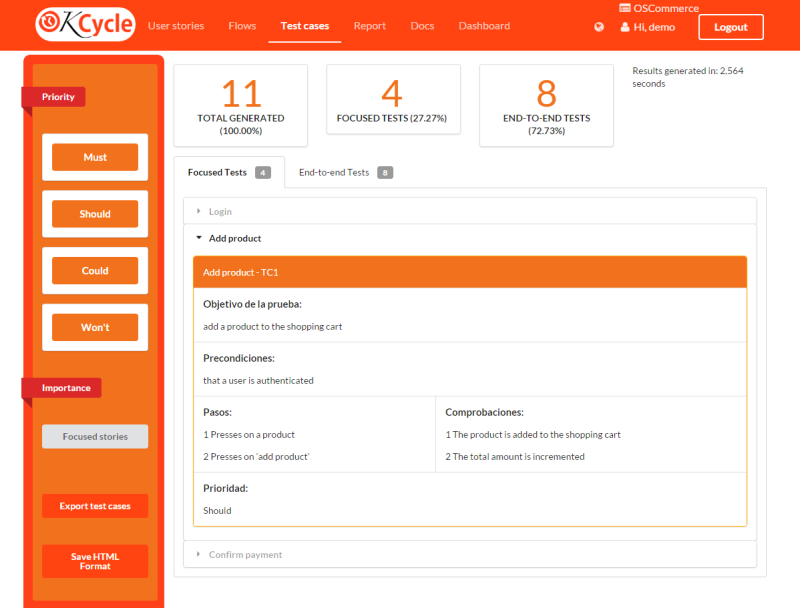

In this section, we describe the K+ component. This component takes as input development user stories enriched with flow sketches and priority indicators (see Section 3.1). Then, a four-step generation algorithm (see Section 3.2) is used in order to automatically generate the following artifacts:

- A set of test case designs which include auto-generated natural language test case steps and assertions.

- A filtered set of test cases to be executed according to available resources and coverage objectives.

Test cases are shown in HTML format with dynamic filtering and hyperlink navigation. They can also be exported to Microsoft Excel (see Fig. 2) or to test management systems; currently, export to TestLink [8] is supported. Implementing the export to other test management systems and other output formats is straightforward.

| Test ID | Objective | Preconditions | Steps | Checks | Priority |
|---|---|---|---|---|---|
| Add product | add a product to the shopping cart | that a user is authenticated | 1. Presses on a product 2. Presses on 'add product' | 2. The product is added to the shopping cart 2. The total amount is incremented | Must |

3.1 Enhancing User Stories for Agile Testing

User stories are commonly-used requirements artifacts in agile contexts. They are short and simple descriptions of features from the point of view of a user or customer of a system. Such stories are usually complemented with acceptance criteria that define satisfaction conditions of the stories.

In order to limit the expressivity of user stories, and consequently reduce their ambiguity, several proposals promote specifying them in a semi-structured form. In [9], it is proposed to define user stories by using structured sentences according to the following pattern:

As a [type of user], I want [some goal] so that [some reason].

For example, in the context of an online e-commerce system, an agile professional could write the following user story:

As a customer, I want to purchase items so that I can buy products online.

In order to add structured format to user stories, there are also purpose-specific languages like Gherkin, which is a business-driven development language that is focused on specifying expected business behavior. This language can be used for specifying user stories and their acceptance criteria. Fig. 3 shows a fragment of a user story specified in Gherkin.

Furthermore, general user stories that cannot be addressed in a single iteration (generally called Epics) need to be decomposed into smaller ones that are implementable in a single agile iteration. For example, in order to develop an online purchase, we should be able to log in to the system, add items to the shopping cart, check-out, etc.

From a tester's perspective, user stories and their acceptance criteria are useful to derive unit test cases that test each user story. However, they do not contain enough explicit information to design end-to-end test cases, since nothing is specified about the possible flows of user stories that implement more general user stories. In order to fill this knowledge gap, we propose drawing sketch flow diagrams that combine user stories in order to obtain the functionality of more general ones. Fig. 4 shows an example of a flow diagram, in which the possible flows of stories that realise the epic “As a customer, I want to purchase items so that I can buy products online” are sketched.

In the following sections we present how to use structured development stories in order to automatically generate a set of test cases.

3.2 Four-Step Generation of Test Cases

In Fig. 5, we illustrate the four-step generation algorithm that implements the generation of test cases. In the following, each step is described.

End-to-end paths discovery. In this step, we take as an input each flow sketch in order to compute the end-to-end paths to be tested. In the case that a user story is atomic (i.e. not decomposed into other simpler stories with an associated flow sketch), this step is skipped.

In our implementation, we use a composed algorithm that combines the detection of strongly-connected components (using the Tarjan algorithm [10]) and a Breadth-First Search strategy to compute all possible end-to-end test cases. The algorithm guarantees full coverage of all possible sequences of steps. The detection of strongly-connected components avoids infinite loops by ensuring that cycles are traversed only once when deriving test case paths. Each path is a valid sequence of user stories.
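As a rough illustration of this step, the following sketch enumerates end-to-end paths through a flow sketch with a breadth-first traversal. It is a simplification and not the KCycle implementation: instead of detecting strongly-connected components with Tarjan's algorithm, it bounds loops by allowing each transition to appear at most once per path. The flow graph, the story names and the function `end_to_end_paths` are hypothetical.

```python
from collections import deque


def end_to_end_paths(flow, start, ends):
    """Enumerate paths from `start` to any story in `ends`, breadth-first.

    Each transition (edge) may appear at most once in a path, so a loop
    in the flow sketch is traversed once and the enumeration terminates.
    """
    paths = []
    queue = deque([((start,), frozenset())])
    while queue:
        path, used_edges = queue.popleft()
        story = path[-1]
        if story in ends:
            paths.append(list(path))
        for next_story in flow.get(story, []):
            edge = (story, next_story)
            if edge not in used_edges:
                queue.append((path + (next_story,), used_edges | {edge}))
    return paths


# Hypothetical flow sketch for the online purchase epic.
flow = {
    "Log in": ["Add product"],
    "Add product": ["Add product", "Check out"],  # self-loop: add another item
    "Check out": [],
}
paths = end_to_end_paths(flow, "Log in", {"Check out"})
for path in paths:
    print(" -> ".join(path))
```

In this example the self-loop on "Add product" yields one path that adds a single product and one that adds a second product before checking out.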

Computing unit test cases based on acceptance criteria. In this step, we take as input each user story and its acceptance criteria. Each acceptance criterion is the base to construct a unit test case. Given clauses correspond to test case preconditions, while When clauses and Then clauses are the base to generate steps and test case assertions. Fig. 6 shows an example of a unit test case chunk based on the acceptance criterion “Valid Login” specified in Fig. 3.
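The clause mapping described above can be sketched as follows. This is a minimal illustration that assumes one scenario per input and only handles the Given, When, Then, And and But keywords; the scenario wording and the function `unit_test_case` are hypothetical and are not taken from K+ or from Fig. 3.

```python
def unit_test_case(scenario_text):
    """Map Given/When/Then clauses to preconditions, steps and checks."""
    case = {"name": None, "preconditions": [], "steps": [], "checks": []}
    target = {"Given": "preconditions", "When": "steps", "Then": "checks"}
    current = None
    for raw in scenario_text.strip().splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("Scenario:"):
            case["name"] = line[len("Scenario:"):].strip()
            continue
        keyword, _, rest = line.partition(" ")
        if keyword in target:
            current = target[keyword]
        elif keyword not in ("And", "But") or current is None:
            continue  # skip lines this sketch does not understand
        # "And"/"But" clauses extend the most recent Given/When/Then group.
        case[current].append(rest.strip())
    return case


# Hypothetical acceptance criterion written in Gherkin.
scenario = """
Scenario: Valid Login
  Given the user is on the login page
  When the user enters a valid username and password
  And the user presses the login button
  Then the home page is shown
"""
case = unit_test_case(scenario)
print(case)
```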

Logical test cases generation. In this step, we take as input the paths generated in the first step and the unit test cases generated in the previous step. The aim is to compute end-to-end test cases by combining each path with all possible available scenarios, without considering (yet) example data. The result is a set of end-to-end test cases, like the fragment shown in Fig. 7.
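One way to picture this combination is as a Cartesian product over the scenarios available for each story in a path. The sketch below assumes exactly that; the story and scenario names and the function `logical_test_cases` are hypothetical, and K+ may apply coverage criteria that prune this set.

```python
from itertools import product


def logical_test_cases(path, scenarios_by_story):
    """Combine a path of stories with every scenario available per story."""
    options = [scenarios_by_story[story] for story in path]
    return [list(zip(path, combo)) for combo in product(*options)]


path = ["Log in", "Add product", "Check out"]
scenarios_by_story = {
    "Log in": ["Valid Login"],
    "Add product": ["Product in stock", "Product out of stock"],
    "Check out": ["Pay by card", "Pay by transfer"],
}
cases = logical_test_cases(path, scenarios_by_story)
for case in cases:
    print(case)
```

With one, two and two scenarios for the three stories, the path expands into four logical test cases.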

Physical test cases generation. This step is only applicable for those input acceptance criteria that specify example data. The input is such data and the logical test cases obtained in the previous step. This step performs an additional level of combination, taking logical test cases and combining them with different test data.
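A minimal sketch of this last combination, assuming that logical steps contain named placeholders and that example data is given as rows of values (similar in spirit to Gherkin example tables). The placeholder syntax, the data and the function `physical_test_cases` are assumptions made for illustration only.

```python
def physical_test_cases(logical_steps, example_rows):
    """Instantiate the logical steps once per row of example data."""
    return [
        [step.format(**row) for step in logical_steps]
        for row in example_rows
    ]


logical_steps = ["Enter {quantity} units of {product}", "Press 'add product'"]
example_rows = [
    {"product": "book", "quantity": 1},
    {"product": "pen", "quantity": 3},
]
physical = physical_test_cases(logical_steps, example_rows)
for steps in physical:
    print(steps)
```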

The set of test cases that result from the described procedure is the set of all possible test cases to be executed. However, we may not have enough time and resources to test all possible test cases. Therefore, K+ allows the whole set of test cases to be filtered according to different criteria such as:

- Test case priority. Taking into account the priorities set for each user story, a priority value for each generated test case is computed. Then, a set of importance values (High, Medium, Low) to be included in the resultant set of test cases may be specified. Those test cases that are not included in this range are not part of the filtering results.

- Specific user stories to be included. A set of specific user stories for which we want to focus the filtered test set may be specified. Only those derived test cases which are aimed at testing the specified stories are included in the filtered set.
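The two filtering criteria can be sketched as a simple predicate over the generated test cases. The sketch assumes that each test case already carries a computed priority and the list of stories it exercises; how K+ derives a test case priority from story priorities is not described here, and the `GeneratedCase` type and `filter_test_cases` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeneratedCase:
    test_id: str
    priority: str   # "High", "Medium" or "Low" (assumed to be precomputed)
    stories: tuple  # user stories exercised by the test case


def filter_test_cases(cases, priorities=None, stories=None):
    """Keep cases matching the selected priorities and target stories.

    A criterion set to None is not applied.
    """
    selected = []
    for case in cases:
        if priorities is not None and case.priority not in priorities:
            continue
        if stories is not None and not set(case.stories) & set(stories):
            continue
        selected.append(case)
    return selected


cases = [
    GeneratedCase("TC1", "High", ("Log in", "Add product")),
    GeneratedCase("TC2", "Low", ("Log in",)),
    GeneratedCase("TC3", "Medium", ("Check out",)),
]
selected = filter_test_cases(
    cases,
    priorities={"High", "Medium"},
    stories={"Add product", "Check out"},
)
print([case.test_id for case in selected])
```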

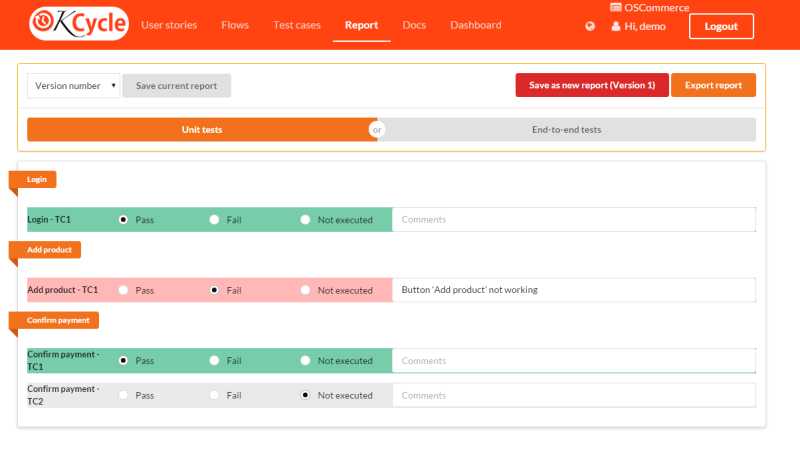

4. Agile Test Cases Execution and Reporting

Test cases generated by following the procedure described in Section 3 can be executed either manually or automatically. Nevertheless, regardless of the execution procedure, the verdicts of test cases need to be reported in order to be part of the KCycle ecosystem. In order to specify the reporting in an agile way, KCycle includes a simple, iteration-by-iteration reporting mechanism (see Fig. 9). The test cases reporting information feeds the input data used by some metrics defined in Section 5. Test case results can also be exported to test management systems like TestLink.
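To make the idea of iteration-by-iteration reporting concrete, the sketch below records verdicts as plain rows and counts them per iteration. The three verdict values come from Section 2; the row layout and the `summarize` function are assumptions and do not reflect the actual KCycle report format.

```python
from collections import Counter
from enum import Enum


class Verdict(Enum):
    PASS = "Pass"
    FAIL = "Fail"
    ERROR = "Error"


def summarize(report):
    """Count verdicts per iteration from (iteration, test_id, verdict) rows."""
    summary = {}
    for iteration, _test_id, verdict in report:
        summary.setdefault(iteration, Counter())[verdict] += 1
    return summary


report = [
    (1, "TC1", Verdict.PASS),
    (1, "TC2", Verdict.FAIL),
    (2, "TC1", Verdict.PASS),
    (2, "TC2", Verdict.PASS),
    (2, "TC3", Verdict.ERROR),
]
summary = summarize(report)
for iteration, counts in sorted(summary.items()):
    print(iteration, {verdict.value: n for verdict, n in counts.items()})
```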

5. Iteration-Based Documentation Generation

The user stories described in Section 3.1 may be formalized by using a restricted syntax as illustrated in Fig. 10. If stories are specified by following this language, the ambiguity is reduced and the following automatic features can be applied in the context of KCycle:

- Test-Driven Generation of a functional model. It uses the engine provided in [11] in order to obtain a living model that evolves as generated test cases change. The obtained model specifies the concepts, relationships and operations that are derived from use cases, which in turn are derived from user stories. The model is specified by using the Unified Modeling Language (UML). Fig. 11 shows a fragment of an output model of the knowledge related to online orders. In this way, in each agile iteration the model is evolved by adding the new knowledge. The model is a knowledge repository that represents the big picture of the project in terms of functional knowledge components.

- Automatic generation of documentation. The obtained model can be structured into auto-generated documentation that can be exported to several formats (HTML website, PDF file, textual OpenDocument file, ArgoUML diagrams, etc.).

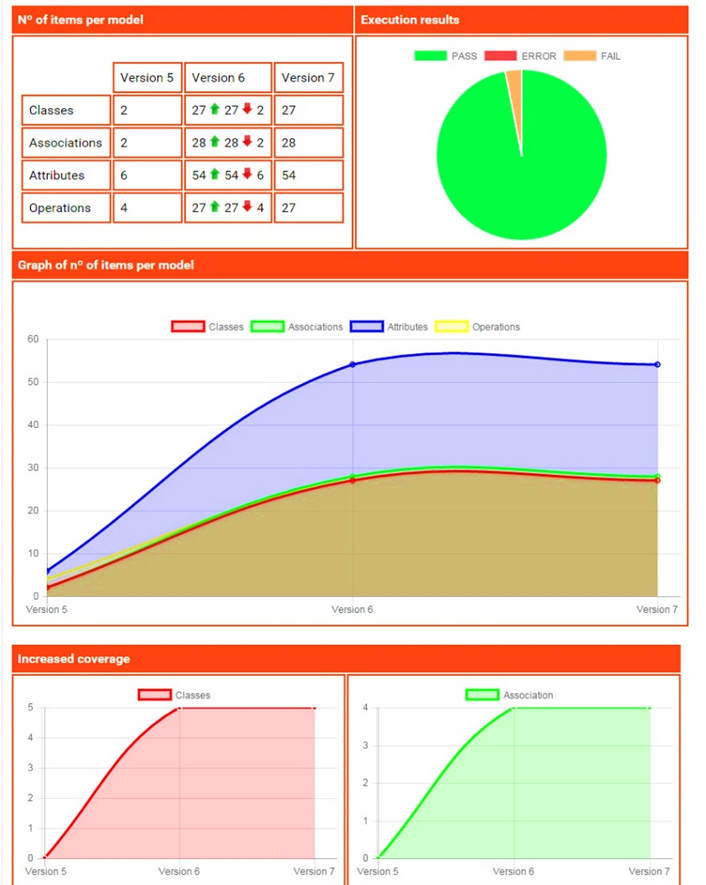

6. Knowledge-Based Metrics for Agile Management

KCycle includes a project management dashboard aimed at supporting the analysis and governance of agile projects. Fig. 12 shows a screenshot of some dashboard metrics. The dashboard presents graphical information that takes into account the following input data:

- User stories and acceptance criteria for each agile iteration (see Section 3.1)

- The generated set of test cases in each agile iteration (see Section 3.2)

- The execution verdicts of each test case for each agile iteration (see Section 4)

- The functional knowledge (concepts, relationships and operations) specified in the UML model generated for each agile iteration (see Section 5)

From this information, KCycle computes several metrics that relate user stories, test cases, test case results and the associated functional knowledge. For example, it computes the productivity of test cases according to the knowledge they test, or the productivity of each agile iteration in terms of tested concepts, relationships and operations. Such metrics, which are called K-Metrics, rely on the knowledge model. They are computed for each agile iteration and compared across iterations in order to analyze the evolution of development and testing activities.
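The exact definitions of the K-Metrics are not given here, so the following sketch only illustrates the kind of computation involved. It assumes that each test case is associated with the set of knowledge elements (concepts, relationships and operations) it exercises, and it derives three hypothetical per-iteration indicators: the number of tested elements, the number of elements tested for the first time, and the average number of elements per test case.

```python
def k_metrics(iterations):
    """Compute illustrative knowledge-based metrics per iteration.

    `iterations` maps an iteration number to a dict from test case id to
    the set of knowledge elements that the test case exercises.
    """
    results = {}
    seen = set()  # elements tested in earlier iterations
    for number in sorted(iterations):
        tests = iterations[number]
        covered = set().union(*tests.values()) if tests else set()
        results[number] = {
            "tested_elements": len(covered),
            "new_elements": len(covered - seen),
            "elements_per_test": len(covered) / len(tests) if tests else 0.0,
        }
        seen |= covered
    return results


# Hypothetical data: element names are invented for the example.
iterations = {
    1: {
        "TC1": {"Customer", "Customer.logIn"},
        "TC2": {"Customer", "Product"},
    },
    2: {
        "TC1": {"Customer", "Customer.logIn"},
        "TC3": {"Product", "ShoppingCart", "ShoppingCart-Product",
                "ShoppingCart.addProduct"},
    },
}
metrics = k_metrics(iterations)
for number, values in metrics.items():
    print(number, values)
```

Comparing the rows for consecutive iterations gives a simple view of how much new functional knowledge each iteration brought under test.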

7. KCycle in DevOps

As illustrated in Fig. 1, there are four automatic generation facilities of KCycle: the generation of test cases, the generation of the model, the generation of documentation and the computation of analysis metrics.

If such features are automatically triggered according to the approach depicted in Fig. 1, then they cover the full cycle (KCycle) presented in the approach. These components may be integrated in a DevOps environment by using continuous integration facilities like Jenkins [7].

The purpose of such integration is to provide a quality-focused approach that includes the main agile activities by incorporating the testing perspective into a DevOps environment.

8. Conclusions

In this paper, we have presented the overview and the main components of KCycle, a quality-focused approach for enhancing agile activities in the context of DevOps environments. The presented approach includes many features that, when combined, may offer an environment with the following advantages: (1) The K+ component reduces the time spent for the design of test cases at each agile iteration, since a set of test cases is automatically computed from enriched user stories, (2) if user stories are defined using a semiformal syntax, then a model can be automatically obtained by a test-driven engine, (3) the reporting of test case executions can be specified into the solution or managed by external test management systems, (4) functional documentation may be generated from the model at the end of each agile iteration in several formats, (5) a quality management dashboard is provided by including knowledge-based metrics that combine several data sources of the environment (user stories, generated test cases, test case verdicts, model evolutions at each iteration,…).

Currently, integration with test management tools is implemented only for TestLink; integration with other similar tools is a work in progress. Furthermore, the export of generated test cases is limited to the use of the algorithm described in Section 3, although other algorithms could be implemented and added to the solution. Similarly, generated test cases are exported to HTML format, but presenting output test cases as word processing files, for example, would be straightforward.

In summary, the KCycle approach contributes to the challenge of enhancing the quality assurance activities in the context of current agile practices, by reducing the testing design effort and making the most of knowledge models to provide innovative management metrics.

References and Literature

- [1] Principles behind the Agile Manifesto. Available at: http://agilemanifesto.org/principles.html

- [2] Hüttermann, M.: DevOps for developers. Apress (2012)

- [3] Pohl, K.: Requirements Engineering: Fundamentals, Principles, and Techniques. Springer (2010)

- [4] Martin, R.: Agile software development: principles, patterns, and practices. Prentice Hall PTR (2003)

- [5] Wynne, M.: The cucumber book: behaviour-driven development for testers and developers. Pragmatic Bookshelf (2012)

- [6] TestLink Open Source Test Management. Available at: http://testlink.org/

- [7] Jenkins: An extendable open source automation server. Available at: https://jenkins-ci.org/

- [8] TestLink Open Source Test Management. Available at: http://testlink.org

- [9] Cohn, M.: User Stories Applied: For Agile Software Development. Addison Wesley (2004)

- [10] Nuutila, E.: On finding the strongly connected components in a directed graph. (1994)

- [11] Tort, A.: The Recover Approach. Available at: https://re-magazine.ireb.org/issues/2015-1-ruling-complexity/the-recover-approach/

Albert Tort is the Research&Innovation Solutions Lead in Sogeti Spain. In recent years, he has worked as a Quality Assurance and Software Control & Testing specialist in several projects for customers in different sectors (banking, airlines, insurance, procurement, etc.). Furthermore, he has led research&innovation projects developed at Sogeti. He was the winner of the “Capgemini-Sogeti Testing Innovation Awards 2015” for the submission of the best individual entry “Recover: Reverse Modeling and Up-To-Date Evolution of Functional Requirements in Alignment with Tests”. He is also a member of the SogetiLabs community.

Previously, he served as a professor and researcher at the Services and Information Systems Engineering Department of the Universitat Politècnica de Catalunya-Barcelona Tech. As a member of the Information Modeling and Processing (MPI) research group, he focused his research on conceptual modeling, software engineering methodologies, OMG standards, knowledge management, requirements engineering, service science, semantic web and software quality assurance. Currently, he is also the coordinator of the postgraduate degree in Software Quality Assurance of the School of Professional & Executive Development of the Technical University Of Catalunya (BarcelonaTech).

He obtained his Computer Science degree in 2008 and he received his M.Sc. in Computing in 2009. In 2012 he presented the PhD thesis “Testing and Test-Driven Development of Conceptual Schemas”. He is the author of several publications in software engineering journals and conferences.