Luisa Mich

Interview with John Mylopoulos

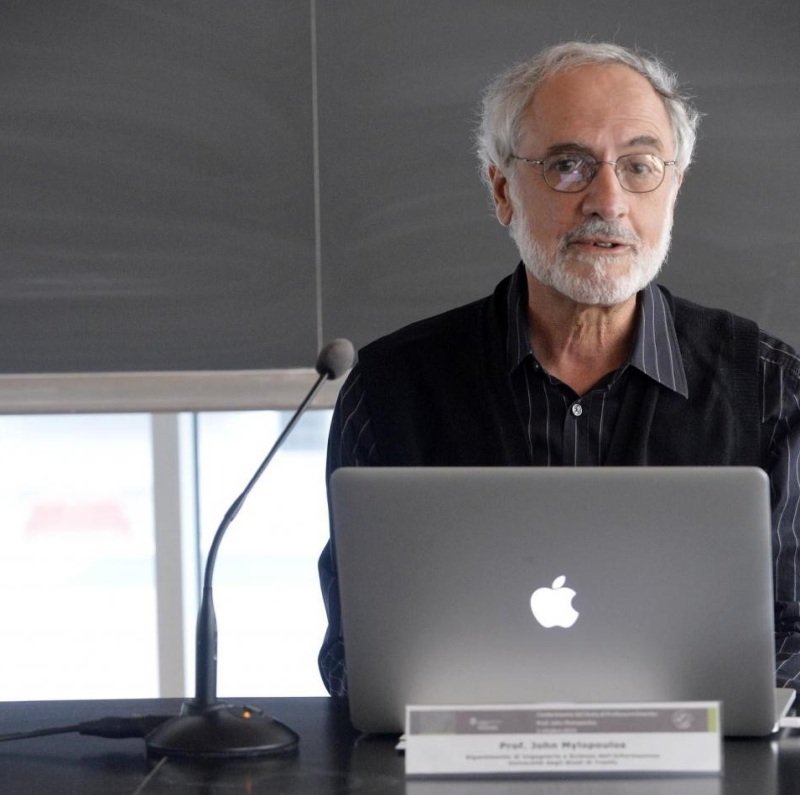

Views of a real RE pioneer

Preface of the Editor in Chief

Perhaps it is like carrying coals to Newcastle when we briefly introduce John Mylopoulos in this preface to the following interview.

Luisa Mich, member of our editorial board, asked John some questions about RE.

Most readers will have read some of [John’s numerous publications](https://scholar.google.de/scholar?hl=de&as_sdt=0%2C5&q=John+Mylopoulos&btnG=) or even met him at an RE event.

John is a pioneer of Requirements Engineering, with special focus on conceptual modeling, and can undoubtedly be regarded as one of the great fathers of RE.

Until his recent retirement he spent almost 50 years researching and teaching in RE and related fields. With this experience behind him, he explains why he is convinced that certification in RE is crucial as well as how he perceives Artificial Intelligence (AI) in the RE context.

Stefan Sturm

Luisa Mich: John, can you please briefly describe your RE background?

John Mylopoulos: I have been a professor of Computer Science at the University of Toronto since 1970, and am now retired. For the past 49 years, I have conducted research in Artificial Intelligence (AI), Databases and Software Engineering (SE), specifically Requirements Engineering (RE). My involvement in RE research started in 1978 when I was asked by a prospective PhD student, Sol Greenspan, whether I’d be willing to work on a formal specification language for requirements. I agreed, and the result of our efforts was one of the first formal requirements modelling languages (RML), presented at ICSE 1982. This was followed by a new proposal for an extensible requirements modelling language (Telos) that could be extended to support requirements and domain modelling and analysis for different domains (e.g., cyber-physical systems, socio-technical systems). Our research on RE continued with a proposal for modelling and analyzing non-functional requirements as “softgoals”: goals that have no clear-cut criterion for their satisfaction. In the same period (early 90s), we proposed a requirements modelling language (i*) that viewed requirements in a social setting consisting of actors with associated goals who depend on each other for the fulfilment of their goals.

Objectives and scope of the discipline: have they changed?

I think not. The main objective of RE was, and remains, to develop concepts, tools and techniques for eliciting, modelling, and analyzing stakeholder requirements to produce a requirements specification that is complete, unambiguous, valid and consistent. What has changed is that today we distinguish between stakeholder requirements that describe stakeholder needs or goals, and functional and quality requirements, which make up a requirements specification for the system-to-be. The scope of RE has also broadened to include new classes of requirements, such as legal requirements derived from laws and regulations, security and privacy, adaptation requirements for adaptive systems, and more.

Open problems: which are the most critical issues?

I will mention two. Firstly, we do not yet have a comprehensive framework for tackling the requirements problem, i.e., a set of concepts, tools and techniques that support the elicitation, modelling and analysis of stakeholder requirements to produce a requirements specification that is complete, unambiguous, valid and consistent. Although there has been progress on specific aspects of such a framework, we are not there yet, by a long shot. Secondly, even though there is ample evidence that RE constitutes the riskiest phase of software development and the cause of most failed projects, there is still resistance to paying proper attention to this phase in Software Engineering practice. This has resulted in failed projects that constitute an embarrassment to our profession. For the latest such fiasco in Canada, check the Phoenix Payroll Project: the system's original development project was costed at less than CAN$5M, it has cost the Government of Canada almost CAN$1B so far, and it will be scrapped altogether once a replacement is available. And remember, the most popular software development technique, agile, only pays lip service to RE and doesn’t work at all for non-functional requirements.

Certifications: is RE certification relevant?

Given the problems noted above with respect to RE practice, I think that certification needs to be made mandatory for practicing requirements engineers. If we are to take seriously the ‘Engineering’ part of RE, we should insist that we do what all other engineering disciplines do: make certification a requirement for RE practitioners.

AI challenges: are they changing the role of RE?

AI systems, such as autonomous vehicles, autonomous weapons and robot-doctors, do not change the role of RE, only its scope. Such systems call for new classes of requirements that need to be studied and analyzed by RE researchers so that we develop techniques for operationalizing them. Among the new classes, I note ethical requirements, which are derived from ethical principles. For an autonomous vehicle, these might include being considerate to nearby vehicles and pedestrians. For an autonomous weapon, on the other hand, these would include not firing at non-combatants. Such requirements would be operationalized in terms of new functions, such as being able to recognize a nearby vehicle, pedestrian or non-combatant, along with dos and don’ts on what can be done with instances of each class. Fairness requirements constitute another class of requirements for machine learning (ML) systems; they make sure that no class of users is discriminated against on the basis of race, religion, etc. This is a critical issue for ML systems because what they learn is based on the training data the system was trained with. Explainability requirements for ML systems are a special class of transparency requirements that is also critical and hinges on the fact that such systems learn in terms of probabilities on patterns of raw data, rather than higher-level concepts. This means that a face recognition ML system has a model of the face(s) it recognizes as probability distributions on arrays of pixels, rather than in terms of shapes of constituents of a face, e.g., eyes, mouth, nose, etc. As a result, the ML system cannot give a conceptual description of a face, and therefore cannot explain why a particular pixel pattern is/isn’t that face. In conclusion, I think that RE has a huge role to play in the design of AI systems. After all, such systems are still software (more precisely, cyber-physical) systems.

Thank you for having shared your thoughts with us.

- [1] Source of the photo: webmagazine.unitn.it

Luisa Mich is an Associate Professor of Computer Science at the University of Trento, Italy. Her research interests include requirements engineering, creativity and web strategies. She is the author of more than 150 papers.