Michael Mey

AI Assistants in Requirements Engineering | Part 1

Introduction and Concepts

Artificial Intelligence (AI) is playing an increasingly critical role in various industries, and Requirements Engineering (RE) is no exception. Large Language Models (LLMs) are AI systems trained on vast amounts of text data that can understand, generate, and manipulate human language with remarkable capability. With the advent of LLM-based products like ChatGPT, Copilot, Claude, Llama, Perplexity, and Gemini, professionals in this field have new tools at their disposal to improve efficiency, accuracy, and overall quality in their work. These AI systems can support tasks ranging from writing user stories to automating documentation, making the complex process of gathering and managing requirements more streamlined and effective.

Introduction

There are numerous use cases where AI can significantly reduce the time and effort required to complete tasks while ensuring higher consistency and quality. Whether it’s drafting precise requirements, reviewing documents, or generating test cases, the potential applications are vast [1]. In software development, AI-assisted code completion and automated code reviews can increase development speed and improve overall code quality [2]. Additionally, in areas like customer operations and marketing, AI can enhance productivity by up to 45% through faster response times and more personalized interactions [3].

Disclaimer: Please note that the specific technologies discussed in this article are evolving rapidly, but general principles and concepts should apply for longer.

This article, the first in a two-part series, will introduce you to the concept of AI assistants in Requirements Engineering, explain the art of effective prompting, and explore the potential of AI assistants.

- The Art of Effective Prompting: Learn the fundamentals of prompting these models to get the most relevant and accurate responses, a crucial skill for leveraging AI effectively.

- AI Assistants: Understand the importance of encapsulating detailed prompts and related documents into a specialized AI assistant, ensuring consistency and efficiency in your interactions.

- Understanding CustomGPTs: Get an overview of what CustomGPTs are and how they can be leveraged in Requirements Engineering.

- Benefits of Creating Your Own CustomGPT: Explore the advantages of tailoring AI assistants to your specific needs and workflows.

The Art of Effective Prompting

Prompting an LLM is fundamentally different from traditional keyword-based search engines like Google. When you use Google, you typically enter brief, specific keywords (like "best restaurants NYC" or "fix printer error 401") to find relevant web pages. In contrast, prompting an LLM is more like having a conversation with a knowledgeable assistant. You can use natural language, provide context, ask follow-up questions, and even set specific roles or parameters for how the AI should respond. For instance, instead of searching "requirements document template", you might tell an LLM: "Act as a senior business analyst and help me create a detailed requirements document for a mobile banking app, focusing on security features". This conversational, context-rich approach allows for more nuanced and targeted assistance.

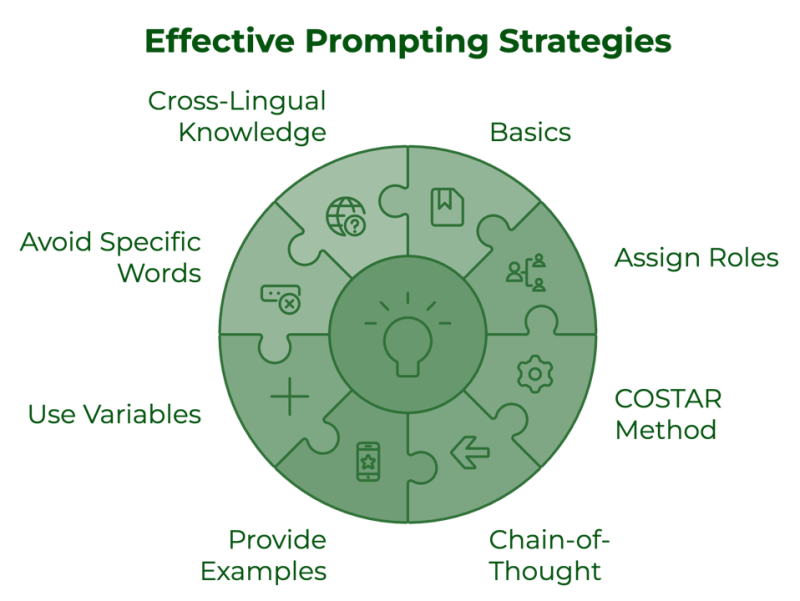

Prompting is the cornerstone of interacting effectively with AI tools. It’s the bridge between your intention and the AI's output, and mastering this art can significantly enhance the quality of the responses you receive. In this chapter, we’ll look into several key strategies for crafting effective prompts, including roles, the CO-STAR method, chain-of-thought prompting, and other advanced techniques.

Basics of Prompting

At its core, prompting is about clear communication. The AI relies entirely on the information you provide to generate its responses. In a way, writing prompts is like writing requirements: it’s crucial that your prompts are clear, concise, and contextually rich. Here’s why:

- Clarity ensures that the AI understands exactly what you’re asking for, reducing ambiguity.

- Context helps the AI generate responses that are relevant and aligned with your expectations.

- Specificity guides the AI to deliver precise results, rather than broad or unfocused answers.

Assign a Role

Role prompting first became popular through prompts starting with "Imagine you are [CHARACTER/PERSON]", a way to set the stage for the AI. This works well, but you can omit the word "imagine" and still get the same results. Instead of saying "Imagine you are", you can instruct the model directly: "You are an expert in Requirements Engineering and Business Analysis with decades of experience in the pharmaceutical industry."

This method helps the LLM focus its responses more effectively by simulating a deep understanding of the context and domain. By giving the AI clear, specific roles, the answers are more tailored to the context of your task, such as drafting precise requirements or providing insights relevant to niche industries like pharmaceuticals. This also minimizes ambiguity and ensures that the AI generates responses with a more focused and professional perspective.

You can mix and match roles to create a unique character that doesn't exist in real life, such as a Requirements Engineer with the investment expertise of Warren Buffett, the creativity of Ernest Hemingway, and the persuasive copywriting skills of Dan Kennedy.
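As a minimal sketch of how a role can be kept separate from the actual task, the following Python snippet builds a two-message conversation. The `system`/`user` message layout is a convention used by several chat interfaces and is an assumption here, not something tied to a specific product. The function name `build_role_messages` and the sample task are hypothetical.

```python
def build_role_messages(role_description: str, task: str) -> list[dict[str, str]]:
    """Return a two-message chat: the role first, then the actual task."""
    return [
        # The role is stated directly ("You are ..."), without "Imagine".
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": task},
    ]


messages = build_role_messages(
    "an expert in Requirements Engineering and Business Analysis "
    "with decades of experience in the pharmaceutical industry",
    # Hypothetical task, used only for illustration.
    "Draft three user stories for a batch-release approval workflow.",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role in its own message makes it easy to reuse the same persona across many different tasks.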

The CO-STAR Method

When it comes to structuring prompts effectively, there are numerous frameworks available to guide your approach. Frameworks like CO-STAR, RTF, RISEN, RODES, or TIGER [7], [8] offer different ways to ensure comprehensive and effective prompts. These frameworks serve as helpful reminders to provide sufficient context to the AI, rather than treating it like a search engine where brief keywords suffice.

The key principle across all frameworks is to be verbose and detailed in your communication with AI. Unlike traditional search engines where brevity is preferred, AI systems benefit from rich context and clear specifications about what you want to achieve.

In this article, we'll explore the CO-STAR method [4] as one example of these frameworks. Let's look at how it breaks down a prompt into six essential components:

- Context: Start by setting the stage. For instance, instead of simply asking, "How do I bake a cake?" you might begin with: "We’re planning a birthday party and need to bake a cake". This background information allows the AI to understand the scenario and provide more relevant advice.

- Objective: Clearly define what you want the AI to accomplish. For example: "Please list the best birthday cakes for kids". This directs the AI to focus on a specific task, ensuring that its response aligns with your goal.

- Style: This is where you dictate how the AI should ‘speak’ in its response. Whether you want the tone to be formal, casual, or even emulate a specific personality, defining the style helps tailor the output to meet your expectations.

- Tone: Similar to style, tone controls the emotional or conversational vibe of the response. Whether you prefer a serious, professional tone or a lighthearted, fun one, setting this parameter can make the AI’s response more engaging or appropriate for the context.

- Audience: Knowing your audience is critical. Whether the response is meant for children, professionals, or beginners, specifying the audience ensures that the AI adjusts its language and complexity accordingly.

- Response Format: Finally, decide how you want the information presented. Whether it’s a simple list, a detailed paragraph, or something structured like a JSON format, defining this upfront ensures the output meets your practical needs.
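The six components can be assembled mechanically, which helps to make sure none of them is forgotten. The following Python sketch shows one possible way to do this. The class name `CoStarPrompt`, the section labels, and the values for style, tone, audience, and response format are illustrative assumptions; only the context and objective come from the cake example above.

```python
from dataclasses import dataclass


@dataclass
class CoStarPrompt:
    """One field per CO-STAR component."""

    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response_format: str

    def render(self) -> str:
        """Join the six components into one labeled prompt text."""
        sections = [
            ("CONTEXT", self.context),
            ("OBJECTIVE", self.objective),
            ("STYLE", self.style),
            ("TONE", self.tone),
            ("AUDIENCE", self.audience),
            ("RESPONSE FORMAT", self.response_format),
        ]
        return "\n\n".join(f"{label}:\n{text}" for label, text in sections)


prompt = CoStarPrompt(
    context="We're planning a birthday party and need to bake a cake.",
    objective="Please list the best birthday cakes for kids.",
    # The four values below are illustrative assumptions.
    style="Practical, like a home-baking guide.",
    tone="Lighthearted and encouraging.",
    audience="Parents with little baking experience.",
    response_format="A numbered list with one sentence per cake.",
)

print(prompt.render())
```

Because every field is required, leaving out a component fails immediately instead of silently producing a thinner prompt.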

Chain of Thought Prompting

Chain-of-thought prompting, also known as step-by-step prompting, involves instructing the AI to "think step-by-step" as it processes your request. This directive encourages the AI to break down its reasoning into smaller, logical components, which often results in more accurate and thoughtful responses. By prompting the AI in this manner, you guide it to approach complex tasks in a methodical way, reducing the likelihood of errors and improving the quality of the output.

For example, instead of asking "What is the best strategy for launching a new product?", you might literally prompt:

What is the best strategy for launching a new product? Think step-by-step.

This approach leverages the AI’s reasoning capabilities, ensuring that each part of the task is addressed thoroughly.

Provide Examples

Providing examples is a powerful way to guide the AI toward the desired output. By showing the AI what you expect, you significantly increase the likelihood of receiving a response that meets your needs.

Example prompt:

Input: "What are the benefits of remote work?"
Output: "Remote work offers flexibility, reduces commute times, and can lead to a better work-life balance."

Input: "What are the challenges of remote work?"
Output: "Challenges include potential isolation, difficulty in separating work from personal life, and potential distractions at home."

Input: "What is a good approach to remote work?"
Output:

Because the final Output is left open, the AI completes the third answer in the style of the first two.

Using this format, you can create a pattern for the AI to follow, ensuring consistency in its responses.
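The Input/Output pattern can also be generated from a list of example pairs, which keeps the formatting identical across all examples. The following Python function is a minimal sketch of that idea. The name `build_few_shot_prompt` is hypothetical.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Format solved examples, then leave the last Output open for the AI."""
    lines = []
    for example_input, example_output in examples:
        lines.append(f'Input: "{example_input}"')
        lines.append(f'Output: "{example_output}"')
        lines.append("")  # blank line between examples
    lines.append(f'Input: "{question}"')
    lines.append("Output:")  # intentionally left open
    return "\n".join(lines)


examples = [
    (
        "What are the benefits of remote work?",
        "Remote work offers flexibility, reduces commute times, "
        "and can lead to a better work-life balance.",
    ),
    (
        "What are the challenges of remote work?",
        "Challenges include potential isolation, difficulty in separating "
        "work from personal life, and potential distractions at home.",
    ),
]

few_shot_prompt = build_few_shot_prompt(
    examples, "What is a good approach to remote work?"
)
print(few_shot_prompt)
```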

Use Variables

A prompt can be structured with variables that you reference in the instruction text, which makes it easier to read and reuse. You define the variables and their values at the bottom of the prompt.

Variables can be formatted using different conventions such as JSON notation, quotation marks, or markup-style tags, provided the chosen format is maintained consistently throughout the prompt.

Combining variables with sample text enables you to set specific parameters that guide the AI, particularly for tone, style, or content structure.

Example prompt: When you write a text, stick to the tone in SAMPLETEXT.

<SAMPLETEXT> This product is designed for professionals who value precision and efficiency in their work. </SAMPLETEXT>

This structure not only ensures that the AI mimics the desired tone but also allows for easy adaptation to different contexts by simply changing the sample text.
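A small helper can enforce the two conventions described above: variables are defined at the bottom, and every variable is actually referenced in the instruction. The following Python sketch uses markup-style tags. The function names `wrap_variable` and `build_prompt` are hypothetical.

```python
def wrap_variable(name: str, value: str) -> str:
    """Wrap a value in markup-style tags, e.g. <SAMPLETEXT> ... </SAMPLETEXT>."""
    return f"<{name}>\n{value}\n</{name}>"


def build_prompt(instruction: str, variables: dict[str, str]) -> str:
    """Place the instruction first and the variable definitions at the bottom."""
    # Guard against variables that are defined but never mentioned.
    unused = [name for name in variables if name not in instruction]
    if unused:
        raise ValueError(f"Variables never referenced in the instruction: {unused}")
    blocks = [wrap_variable(name, value) for name, value in variables.items()]
    return "\n\n".join([instruction, *blocks])


tone_prompt = build_prompt(
    "When you write a text, stick to the tone in SAMPLETEXT.",
    {
        "SAMPLETEXT": "This product is designed for professionals who value "
        "precision and efficiency in their work."
    },
)
print(tone_prompt)
```

Changing the tone for another context then only requires passing a different value for `SAMPLETEXT`.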

Blacklist Words or Phrases

To maintain control over language and ensure the output aligns with your standards, it’s often necessary to instruct the AI to avoid certain words or phrases. This can be particularly useful when adhering to brand guidelines or maintaining a specific tone [5].

Example prompt: Please write the content using the tone in SAMPLETEXT and avoid using any of the words in BLACKLISTWORDS.

<SAMPLETEXT> This product is designed for professionals who value precision and efficiency in their work. </SAMPLETEXT>

<BLACKLISTWORDS> Buckle up, Delve, Dive, Elevate, Embark, Embrace, Explore, Discover, ... </BLACKLISTWORDS>

By integrating this instruction into your prompt, you can prevent the AI from using any undesirable terms, ensuring the final output meets your exact style.
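Since a model may not always follow such an exclusion instruction, it can be useful to check the generated text afterwards. The following Python sketch is one possible check, not part of any AI tool. The function name `find_blacklisted` and the sample draft are hypothetical, and the word list contains only the entries visible in the example above.

```python
import re

BLACKLIST = [
    "Buckle up", "Delve", "Dive", "Elevate",
    "Embark", "Embrace", "Explore", "Discover",
]


def find_blacklisted(text: str, blacklist: list[str]) -> list[str]:
    """Return the blacklisted words or phrases that occur in the text."""
    hits = []
    for phrase in blacklist:
        # Whole-word, case-insensitive match; inflected forms such as
        # "dived" are deliberately not matched in this simple sketch.
        pattern = r"\b" + re.escape(phrase) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            hits.append(phrase)
    return hits


# Hypothetical AI output used for illustration.
draft = "Let's dive into the features and explore the dashboard."
print(find_blacklisted(draft, BLACKLIST))  # prints ['Dive', 'Explore']
```

If the check returns any hits, you can ask the AI to revise the text and name the offending words explicitly.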

Leverage Cross-Lingual Knowledge

When working with LLMs, it's beneficial to prompt them to check their knowledge across multiple language spaces or domains. This approach can yield more comprehensive and nuanced responses, especially for concepts that might have different connotations or applications in various linguistic or cultural contexts.

Example prompt: Before answering, please consider any relevant information or nuances about [topic] that might exist in other languages or cultural contexts, particularly in [specific language(s) or culture(s)]. Then, provide your response incorporating this broader perspective.

This technique is particularly useful when:

- Dealing with concepts that might have culture-specific interpretations

- Exploring technical terms that might have different meanings or applications across industries or regions

- Seeking a more global or diverse perspective on a topic

- Working on localization or internationalization projects

By encouraging the LLM to access its knowledge across different language spaces, you can often uncover insights or perspectives that might not be immediately apparent when considering the topic from a single linguistic or cultural viewpoint.

Common Challenges in Prompting

These refined strategies provide a solid foundation for crafting prompts that yield accurate, relevant, and high-quality AI responses. Mastering these techniques not only enhances the immediate output but also makes your interactions with AI tools more efficient and effective over time.

However, when working with AI, common challenges arise, especially when dealing with lengthy and complex prompts, which can easily span several pages. Managing and storing these lengthy prompts for future use can be cumbersome.

You might also need to provide additional context to the AI by uploading relevant documents or linking to a broader knowledge base. This added complexity makes it crucial to avoid starting from scratch each time you encounter a new use case for the same prompt and knowledge base package.

To address these challenges, it becomes essential to find ways to encapsulate these complex prompts into a more manageable format, ensuring they can be reused efficiently and consistently.

In the next chapter, we’ll explore how to encapsulate these complex prompts into an AI Assistant, ensuring you can easily reuse them and maintain consistency in your interactions.

AI Assistants: Integrating Prompts and Resources

AI assistants represent a significant leap forward in how we interact with and leverage artificial intelligence in our daily work. At their core, these assistants are built upon large language models (LLMs) that are specialized to serve specific purposes or domains.

The classic way to specialize a model is fine-tuning, a process where a pre-trained AI model is further trained on additional, often specialized, data to enhance its performance on specific tasks or within particular subject areas. This process allows the model to produce more nuanced responses within its designated field of expertise.

Fine-tuning can be a complex and resource-intensive process requiring significant technical expertise and computational power, so companies have developed AI assistants as a more accessible way for end-users to get a comparable kind of specialization.

AI assistants typically achieve this without retraining the underlying model. Instead, they integrate specific prompts, documents, and other resources, so users can tailor the AI to particular contexts, such as Requirements Engineering, without needing to understand the technical intricacies of machine learning.

The key features of AI assistants include:

- Domain-Specific Knowledge Integration: The ability to incorporate relevant documents, guidelines, and best practices.

- Customizable Behavior: Can be configured to respond in specific ways or follow particular methodologies.

- Context Awareness: Understanding and operating within the specific context of your work or industry.

- Prompt Encapsulation: Storing and utilizing complex prompts to perform specialized tasks efficiently.

- Adaptive Learning: The potential to be updated and refined based on ongoing interactions and new information.

Understanding CustomGPTs: An Overview

CustomGPTs represent a significant milestone in the evolution of AI assistants, introduced by OpenAI in November 2023 [6]. To understand their importance, it's crucial to consider the context of their release.

In November 2022, OpenAI launched ChatGPT, based on the GPT-3.5 model, which sparked widespread interest and adoption of conversational AI technology. This release marked the beginning of what many consider the recent "AI hype" period. Building on this success, OpenAI released GPT-4 in March 2023, further advancing the capabilities of large language models.

CustomGPTs, introduced a year after the initial ChatGPT release, represent OpenAI's approach to making AI assistants more accessible and customizable for a broader audience. These AI assistants allow users to tailor GPT models to specific use cases without requiring deep technical expertise in AI or programming.

Key aspects of CustomGPTs include:

- Instructions: Users can provide detailed instructions that guide the AI's behavior, tone, and areas of focus. This is where you define the AI's role, expertise, and how it should interact with users.

- Knowledge Integration: CustomGPTs can be enhanced with additional information through document uploads. This feature allows the AI to access and utilize specific data, guidelines, or reference materials relevant to its designated purpose.

- Conversation Starters: These are predefined prompts that help users begin interactions with the AI in relevant and productive ways.

- Capabilities: Users can enable or disable specific functionalities such as web browsing, image generation, or data analysis, tailoring the AI's capabilities to the intended use case.
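To make the four aspects more tangible, the following Python sketch models them as a plain data structure. This is only an illustration of what such an assistant encapsulates. It is not an OpenAI API, and the class name `AssistantConfig`, the assistant name, the file names, and the capability keys are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AssistantConfig:
    """Illustrative container for the four aspects of a custom assistant."""

    name: str
    instructions: str
    knowledge_files: list[str] = field(default_factory=list)
    conversation_starters: list[str] = field(default_factory=list)
    capabilities: dict[str, bool] = field(default_factory=dict)

    def enabled_capabilities(self) -> list[str]:
        """Return the names of all capabilities that are switched on."""
        return sorted(name for name, on in self.capabilities.items() if on)


config = AssistantConfig(
    name="RE Assistant",  # hypothetical
    instructions=(
        "You are an expert in Requirements Engineering and Business Analysis. "
        "Answer in a precise, professional tone."
    ),
    knowledge_files=["glossary.pdf", "user-story-guidelines.docx"],  # hypothetical
    conversation_starters=["Review this user story for ambiguity."],
    capabilities={
        "web_browsing": False,
        "image_generation": False,
        "data_analysis": True,
    },
)

print(config.enabled_capabilities())  # prints ['data_analysis']
```

Writing the configuration down in this form, even informally, makes it easier to review and to recreate the same assistant on another platform.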

CustomGPTs exemplify the trend towards more specialized and user-friendly AI assistants, making the power of advanced language models more accessible and applicable to specific domains like Requirements Engineering.

Benefits of Creating Your Own CustomGPT

Here are several advantages of using an AI Assistant like a CustomGPT, rather than starting from scratch each time:

- No Need for External Prompt Management: You won’t have to manage complex prompts in separate applications or documents. Everything is integrated within your CustomGPT, streamlining your workflow.

- Clear Expectations and Specialized Assistance: You can rely on your CustomGPT to consistently function within a specific domain, ensuring that its expertise is tailored to your needs and that you know what to expect.

- Consistent and Reliable Output: By embedding your instructions and knowledge base, your CustomGPT delivers more uniform, high-quality results, making it a dependable tool for repetitive tasks.

- Easily Shareable Across Teams or Clients: You have the option to share your CustomGPT with team members or customers, ensuring everyone benefits from the same expertise. You can even publish it to the official GPT Store for broader use.

Conclusion

In this first part of our exploration into AI assistants in Requirements Engineering, we've covered the foundational concepts that are driving the integration of AI into professional workflows. We began by understanding the critical role of effective prompting, a skill that underlies all productive interactions with AI language models.

We then delved into the concept of AI assistants, exploring how these tools encapsulate complex AI capabilities in user-friendly interfaces. By integrating domain-specific knowledge, customizable behaviors, and adaptive learning capabilities, AI assistants offer a powerful means of enhancing productivity and quality in Requirements Engineering processes.

Our overview of CustomGPTs provided insight into a specific implementation of AI assistants, highlighting how the rapid evolution of AI technology is making these tools increasingly accessible and tailored to specific needs.

As we conclude this part, it's clear that AI assistants represent a significant opportunity for Requirements Engineering professionals to streamline their workflows, improve the consistency and quality of their outputs, and tackle complex challenges with enhanced capabilities.

In Part 2 of this series, we'll dive deeper into the practical aspects of implementing AI assistants in Requirements Engineering workflows. We'll explore how to build and customize your own AI assistants, compare different platforms and their offerings, and look ahead to the future of AI in this field. Whether you're just beginning to explore AI tools or looking to optimize your existing processes, the next part will provide valuable insights and practical guidance for leveraging AI assistants in your work.

References

- [1] The Flourishing Potential of AI Use Cases and Benefits

- [2] AI for Operational Efficiency - Use Cases and Examples

- [3] The economic potential of generative AI: The next productivity frontier

- [4] Prompt Engineering Deep Dive: Mastering the CO-STAR Framework

- [5] Exclude These Words From ChatGPT - Growth Hacking University

- [6] Introducing GPTs

- [7] 5 Prompt Frameworks to Level Up Your Prompts

- [8] TIGER Prompt Generator

Michael Mey, Managing Consultant at Obvious Works, holds a diploma in Computer Science from Furtwangen University, Germany, where he first explored AI and neural networks. With extensive experience in international software projects, particularly in the financial industry, Michael has held key roles at major corporations and launched a crypto startup through an incubation program, blending corporate and entrepreneurial insights. An Agile coach and trainer, he specializes in Requirements Engineering and AI integration. Michael is engaged in the IREB Special Interest Group AI and regularly speaks at conferences like REConf, REFSQ, and Scrum Day. As co-creator of the AIxRE Masterclass, he is dedicated to helping organizations optimize their processes by integrating AI into Requirements Engineering practices.